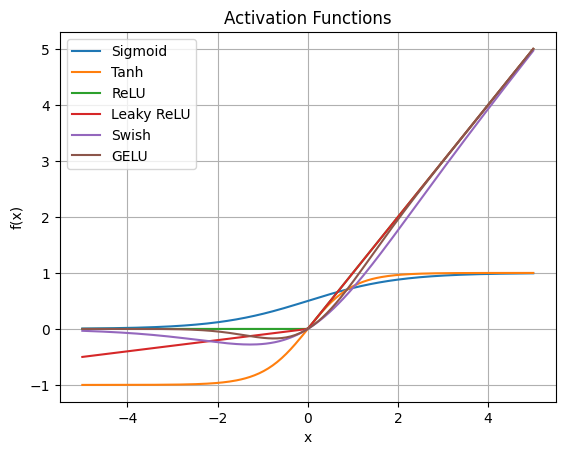

import numpy as np
import matplotlib.pyplot as plt
from scipy.special import erf

# Prepare the x-axis values
x = np.linspace(-5, 5, 200)

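# Define each activation function

def sigmoid(x):
    # Squashes the input into (0, 1)
    return 1 / (1 + np.exp(-x))

def tanh(x):
    # Squashes the input into (-1, 1)
    return np.tanh(x)

def relu(x):
    # Zero for negative inputs, identity otherwise
    return np.maximum(0, x)

def leaky_relu(x, alpha=0.1):
    # Like ReLU, but negative inputs keep a small slope alpha
    return np.where(x > 0, x, alpha * x)

def swish(x):
    # Input scaled by its own sigmoid
    return x * sigmoid(x)

def gelu(x):
    # Exact GELU: input scaled by the standard normal CDF
    return 0.5 * x * (1 + erf(x / np.sqrt(2)))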

# Compute the outputs
y_sigmoid = sigmoid(x)
y_tanh = tanh(x)
y_relu = relu(x)
y_leaky_relu = leaky_relu(x)
y_swish = swish(x)
y_gelu = gelu(x)

# Plot
plt.plot(x, y_sigmoid, label='Sigmoid')
plt.plot(x, y_tanh, label='Tanh')
plt.plot(x, y_relu, label='ReLU')
plt.plot(x, y_leaky_relu, label='Leaky ReLU')
plt.plot(x, y_swish, label='Swish')
plt.plot(x, y_gelu, label='GELU')

plt.xlabel('x')
plt.ylabel('f(x)')
plt.title('Activation Functions')
plt.legend()
plt.grid(True)
plt.show()
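
To read the curves numerically instead of by eye, the same formulas can be evaluated at a few points. The following is only a minimal sketch: it restates the definitions as scalar functions using the standard library's `math` module, so it runs without NumPy or SciPy. The helper name `spot_check` is hypothetical and not part of the original script.

```python
import math

def sigmoid(x):
    return 1 / (1 + math.exp(-x))

def relu(x):
    return max(0.0, x)

def leaky_relu(x, alpha=0.1):
    # Same default slope as in the plotting script
    return x if x > 0 else alpha * x

def swish(x):
    return x * sigmoid(x)

def gelu(x):
    # Exact GELU via the error function
    return 0.5 * x * (1 + math.erf(x / math.sqrt(2)))

def spot_check(x):
    """Return each activation's value at a single point x (hypothetical helper)."""
    return {
        "Sigmoid": sigmoid(x),
        "Tanh": math.tanh(x),
        "ReLU": relu(x),
        "Leaky ReLU": leaky_relu(x),
        "Swish": swish(x),
        "GELU": gelu(x),
    }

# Print the values at the edges and the center of the plotted range
for point in (-5.0, 0.0, 5.0):
    values = spot_check(point)
    print(point, {name: round(v, 4) for name, v in values.items()})
```

At `x = 0`, Sigmoid evaluates to 0.5 while the other five functions evaluate to 0. Toward the right edge of the plotted range, Swish and GELU approach the ReLU line.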